When I was in my second year of university, one of my lecturers was very fond of reminding us about the importance of code quality and standards. "In the real world", we were told, "in the industry", you won't be able to get away with uninformative variable names. You will be shunned if you don't comment your code properly. Our assignments in that unit had to be perfect - no using int i=0; for a loop, it had to be int index=0;. All of this was meant to prepare us for when we left the nest, spread our wings and went out into the wide open world.

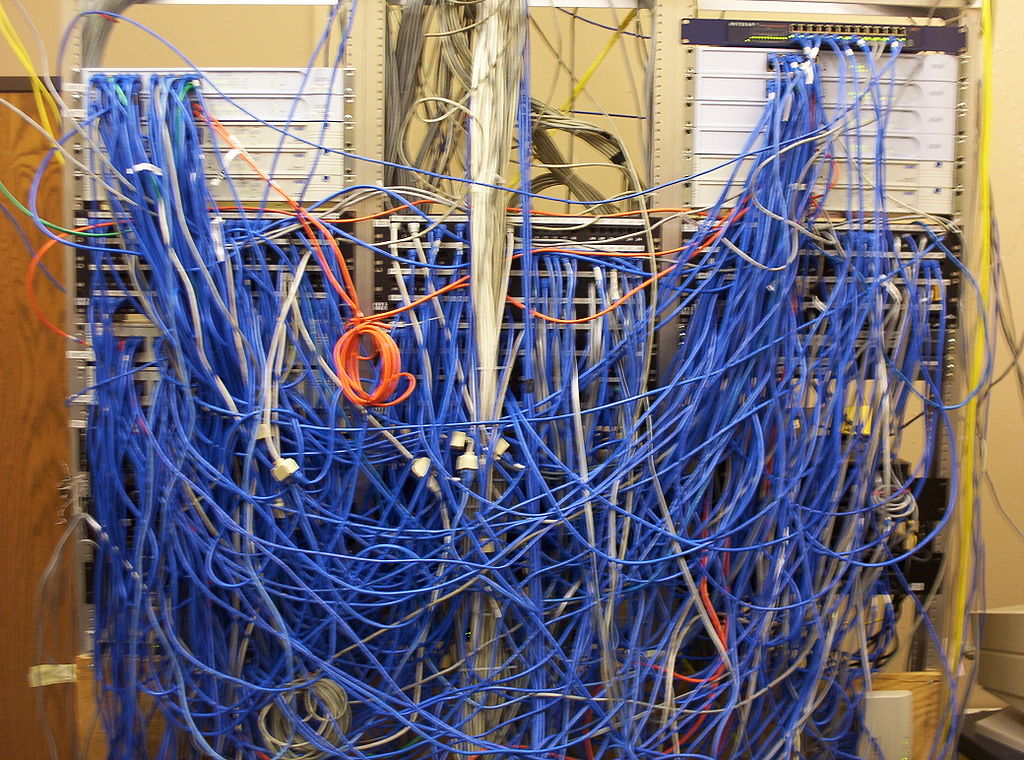

Of course, that was just a fantasy. What really happens is that you graduate from university and go to a job at an established company. You spend your first two or three weeks going through the codebase, familiarising yourself with how it all fits together. The code is an absolute mess, naturally. Fifteen years of rapid, agile development has conspired to create the monolithic mess you see.

Most junior developers will probably baulk at the messiness of the codebase they've "inherited", and attempt to set it right. A noble cause, really, but ultimately futile. I've been there and done it myself. Hours and hours have been wasted rewriting, refactoring, and modernising code that has been working for the better part of a decade. Yeah, it's hard to maintain, but it works. Over time, the developer stops being a junior developer intent on shaping the world in their own image, and becomes a senior developer who knows which battles are worth fighting.

Learning how to mould your style to that of the existing codebase is a crucial skill for any developer. At almost every job you take in your career, you'll be adopting a terrible, shitty codebase held together with duct tape, and that's just part of the job. This isn't to say that change is impossible; it just takes a long time.